Can drones fly on their own without maps or extra hardware? A new system shows how basic physics and small neural networks can make it possible.

Researchers at Shanghai Jiao Tong University have developed a new way for drones to navigate on their own without relying on large or expensive components. Inspired by how insects fly, the approach combines deep learning with basic physics principles, letting drones move through their surroundings without building a map or depending on external control.

Rather than chaining separate mapping, planning, and control modules, the system uses an end-to-end neural network that maps raw sensor data directly to control signals. This design mirrors how insects navigate with limited neural resources and no explicit map or plan.

The system works from a depth map of just 12×16 pixels and still navigates successfully. Despite the low resolution, the depth data carry enough cues for the network to steer the drone and avoid obstacles. Training took place in a simulator, where simple geometric shapes were used to build varied environments. A physics engine was part of the training loop, so the same setup could train both single-drone and multi-drone flight; in the multi-drone case, the other drones were treated as moving obstacles.
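The article does not say how the 12×16 map is produced from the sensor. One plausible approach, assumed here purely for illustration, is min-pooling a full-resolution depth frame: keeping the nearest depth in each cell stops thin obstacles from being averaged away. The function name `downsample_depth_min` and the 48×64 example frame are hypothetical.

```python
def downsample_depth_min(depth, out_h=12, out_w=16):
    """Shrink a depth image to out_h x out_w by taking the minimum of each block.

    Hypothetical helper. Taking the minimum (the nearest surface) rather than
    the mean keeps thin, close obstacles visible after downsampling. Assumes
    the input height and width are exact multiples of out_h and out_w.
    """
    in_h, in_w = len(depth), len(depth[0])
    if in_h % out_h or in_w % out_w:
        raise ValueError("input size must be a multiple of the output size")
    bh, bw = in_h // out_h, in_w // out_w  # block size covered by one output cell
    return [
        [
            min(depth[r * bh + i][c * bw + j] for i in range(bh) for j in range(bw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical 48x64 frame: open space at 10 m with a thin pole at 1.5 m.
frame = [[10.0] * 64 for _ in range(48)]
for row in frame:
    row[30] = 1.5  # one-pixel-wide vertical pole in column 30
small = downsample_depth_min(frame)
print(len(small), len(small[0]))  # 12 16
print(small[0][7])                # 1.5: the pole survives downsampling
```

With mean pooling instead, each cell containing the pole would read 7.875 m rather than 1.5 m, making a close obstacle look far away.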

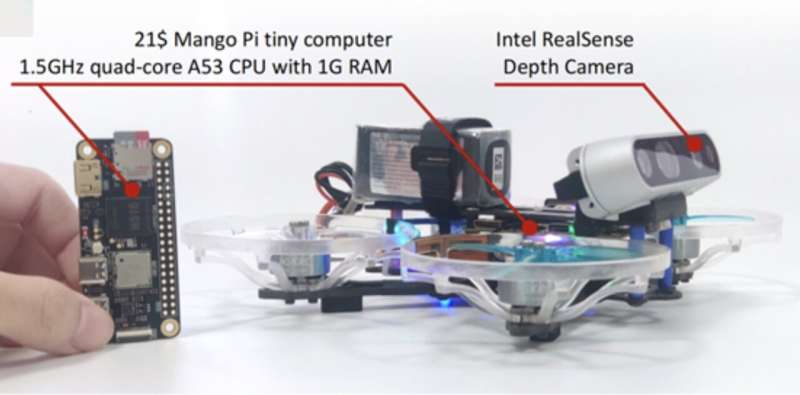

One strength of the system is its compact architecture: just three convolutional layers, small enough to run on a $21 computing board. Training takes two hours on a GPU. Because the model handles swarm navigation without planning or communication between drones, it scales easily to larger groups.
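The sketch below shows what "end-to-end" means in practice: a depth map goes in, a control vector comes out, and there is no map or planner in between. Only the use of three convolutional layers comes from the article; the channel counts, 3×3 kernels, strides, four-value output, and all function names are hypothetical, and pure Python stands in for a real deep-learning framework so the example runs anywhere.

```python
import random


def conv2d_relu(x, weights, bias, stride=1):
    """Valid (no padding) 2D convolution followed by ReLU.

    x: [C_in][H][W], weights: [C_out][C_in][k][k], bias: [C_out].
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    k = len(weights[0][0])
    h_out, w_out = (h - k) // stride + 1, (w - k) // stride + 1
    out = []
    for o, w_o in enumerate(weights):
        channel = []
        for i in range(h_out):
            row = []
            for j in range(w_out):
                s = bias[o]
                for c in range(c_in):
                    for di in range(k):
                        for dj in range(k):
                            s += w_o[c][di][dj] * x[c][i * stride + di][j * stride + dj]
                row.append(max(0.0, s))  # ReLU
            channel.append(row)
        out.append(channel)
    return out


def make_conv(c_in, c_out, k, rng):
    """Random weights of shape [c_out][c_in][k][k] and zero biases."""
    weights = [[[[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(k)]
                for _ in range(c_in)] for _ in range(c_out)]
    return weights, [0.0] * c_out


# Hypothetical layer sizes: (in_channels, out_channels, kernel, stride).
LAYERS = [(1, 8, 3, 1), (8, 16, 3, 2), (16, 32, 3, 1)]
N_OUT = 4  # hypothetical command, e.g. thrust plus three body rates


def build_policy(seed=0):
    rng = random.Random(seed)
    convs = [(make_conv(ci, co, k, rng), s) for ci, co, k, s in LAYERS]
    h, w = 12, 16  # input depth-map size from the article
    for _, _, k, s in LAYERS:
        h, w = (h - k) // s + 1, (w - k) // s + 1
    flat = LAYERS[-1][1] * h * w  # flattened feature size (32 * 2 * 4 = 256)
    head_w = [[rng.gauss(0.0, 0.1) for _ in range(flat)] for _ in range(N_OUT)]
    return convs, (head_w, [0.0] * N_OUT)


def policy(depth_12x16, params):
    """Map one 12x16 depth map directly to a control vector (no map, no planner)."""
    convs, (head_w, head_b) = params
    x = [depth_12x16]  # add a channel dimension
    for (w, b), stride in convs:
        x = conv2d_relu(x, w, b, stride)
    feats = [v for ch in x for row in ch for v in row]
    return [sum(wi * fi for wi, fi in zip(row, feats)) + b
            for row, b in zip(head_w, head_b)]


def count_params(params):
    convs, (head_w, head_b) = params
    n = 0
    for (w, b), _ in convs:
        n += sum(len(k_row) for oc in w for ic in oc for k_row in ic) + len(b)
    return n + sum(len(r) for r in head_w) + len(head_b)


params = build_policy()
command = policy([[5.0] * 16 for _ in range(12)], params)
print(len(command), count_params(params))  # 4 6916
```

With these made-up sizes the network has 6,916 parameters, or 27,664 bytes as 32-bit floats, which illustrates how easily a three-layer design fits on a small embedded board.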

Earlier deep-learning approaches needed labeled data and often failed outside the lab. By building the drone's physics model into training, this approach trains faster and generalizes better to new settings, particularly in how the drone moves and stays stable.
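The article does not detail how the physics model enters training. One common way, assumed here, is to differentiate a loss through a simulated rollout so that the dynamics themselves supply the gradients. In the sketch below a 1D point mass stands in for the drone and a two-gain feedback law (`kp`, `kd`) stands in for the neural network; all names, constants, and the loss are hypothetical, and gradients are propagated with forward-mode sensitivities.

```python
def rollout_loss_and_grad(kp, kd, target=1.0, dt=0.05, steps=60):
    """Simulate a 1D point mass under a feedback policy; return loss and its gradient.

    Hypothetical stand-in for a drone model. The gradient d(loss)/d(kp, kd) is
    carried through every physics step (forward-mode sensitivities), so the
    simulator itself provides the training signal.
    """
    p, v = 0.0, 0.0
    dp = [0.0, 0.0]  # d p / d (kp, kd)
    dv = [0.0, 0.0]  # d v / d (kp, kd)
    loss, grad = 0.0, [0.0, 0.0]
    for _ in range(steps):
        err = target - p
        a = kp * err - kd * v  # policy output: commanded acceleration
        da = [err - kp * dp[0] - kd * dv[0],
              -kp * dp[1] - v - kd * dv[1]]
        v = v + a * dt                                 # semi-implicit Euler
        dv = [dv[i] + da[i] * dt for i in range(2)]
        p = p + v * dt
        dp = [dp[i] + dv[i] * dt for i in range(2)]
        loss += (p - target) ** 2 * dt                 # tracking error over time
        grad = [grad[i] + 2.0 * (p - target) * dp[i] * dt for i in range(2)]
    return loss, grad


def train(kp=1.0, kd=0.5, lr=0.02, iters=100):
    """Plain gradient descent on the two gains, using gradients from the simulator."""
    for _ in range(iters):
        _, (g_kp, g_kd) = rollout_loss_and_grad(kp, kd)
        kp -= lr * g_kp
        kd -= lr * g_kd
    return kp, kd


loss0, grad0 = rollout_loss_and_grad(1.0, 0.5)
h = 1e-6  # central finite difference on kp as a sanity check
fd = (rollout_loss_and_grad(1.0 + h, 0.5)[0] - rollout_loss_and_grad(1.0 - h, 0.5)[0]) / (2 * h)
print(grad0[0], fd)  # the two values should agree closely
kp, kd = train()
print(loss0, rollout_loss_and_grad(kp, kd)[0])
```

The point of the design is that no labeled data are needed: the loss is defined on simulated flight, and the physics model tells the optimizer how each parameter change would alter that flight.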

The researchers showed that small models can match or outperform large models trained on big datasets. This challenges the assumption that more data is always better; building in physical knowledge and using well-matched training conditions may work better.

The artificial neural network (ANN), with under 2 MB of parameters, lets drones fly at 20 m/s using only depth input. This suggests that a strong internal model of physics can matter more than high-resolution sensing.
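Two quick calculations put these figures in context. Assuming the parameters are stored as 32-bit floats (the article does not state the precision), a 2 MB budget caps the network at roughly half a million parameters. At 20 m/s, the distance the drone covers between control commands depends directly on how often the network runs; the control rates below are hypothetical, chosen only to illustrate the trade-off.

```python
BYTES_PER_FLOAT32 = 4  # assumption: parameters stored as 32-bit floats


def max_float32_params(budget_bytes):
    """Largest number of float32 parameters that fit in a byte budget."""
    return budget_bytes // BYTES_PER_FLOAT32


def metres_per_step(speed_mps, control_hz):
    """Distance covered between two consecutive control commands."""
    return speed_mps / control_hz


print(max_float32_params(2_000_000))      # 500000 (2 MB read as 2,000,000 bytes)
print(max_float32_params(2 * 1024 ** 2))  # 524288 (2 MiB)
for hz in (10, 30, 100):  # hypothetical control rates, not reported in the article
    print(f"{hz} Hz: {metres_per_step(20.0, hz):.2f} m per command")
# 10 Hz: 2.00 m per command
# 30 Hz: 0.67 m per command
# 100 Hz: 0.20 m per command
```

The faster a compact network can produce commands, the less distance the drone travels between decisions, which helps explain why a small model can be an advantage at high speed.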

Although it was trained in simulation, the system generalized broadly. It could support tasks such as drone racing, filming, warehouse inspection, and search and rescue in areas with limited GPS. The study shows how simple neural networks trained with physics can support autonomous drone flight at scale.