With the new model, a single camera is enough for a robot to guide its motion by vision alone, without relying on input from embedded sensors.

A new AI-driven control method developed by MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) could transform how robots are trained and deployed. By relying on visual input rather than onboard sensors or hand-built models, the approach lets robots learn how their bodies move and respond to commands, directly from their own observations.

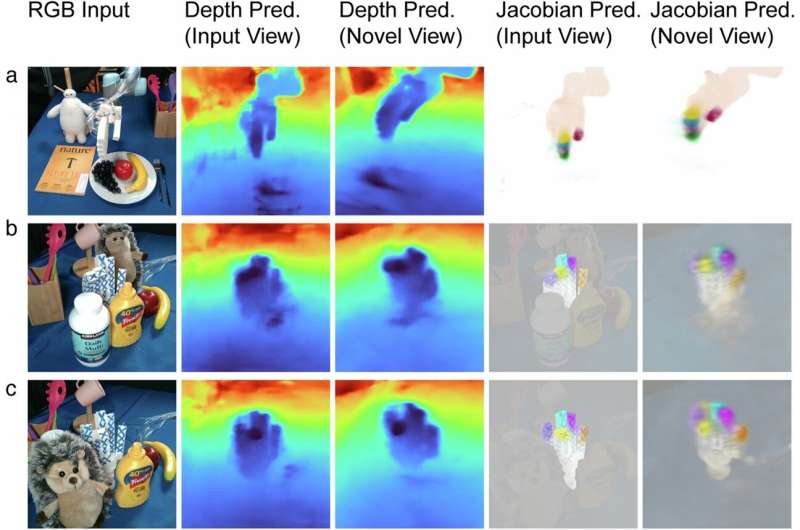

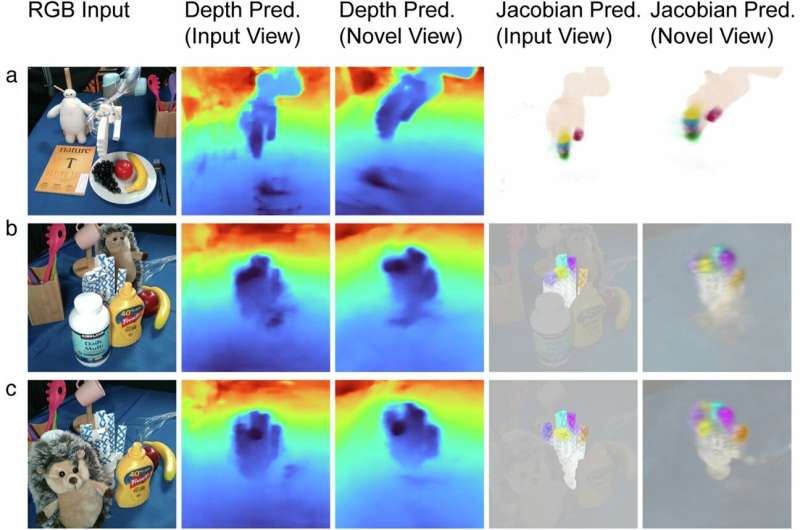

Dubbed Neural Jacobian Fields (NJF), the technique allows robots to map how their body parts respond to control signals, using only video footage of themselves in motion. A single camera is sufficient for real-time tracking and movement planning once the system is trained. This sidesteps the traditional need for digital twins or precise structural data, which are difficult to obtain for soft or irregular robots.
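In the usual notation (chosen here for illustration; the symbols are not taken from the researchers' work), a Jacobian relates a small change $\Delta\mathbf{u}$ in the robot's $m$ motor commands to the resulting displacement of a 3D point $\mathbf{x}$ on its body. A Jacobian *field* assigns such a matrix to every point, so a single learned function describes how the whole body responds. The relation is a first-order approximation, valid for small command changes:

$$
\Delta\mathbf{x} \approx \mathbf{J}(\mathbf{x})\,\Delta\mathbf{u},
\qquad
\mathbf{J}(\mathbf{x}) = \frac{\partial \mathbf{x}}{\partial \mathbf{u}} \in \mathbb{R}^{3 \times m}
$$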

The robot builds an internal model linking motor signals to physical movements. Once this mapping is learned, the robot can plan and perform new tasks by optimising its motor commands to reach a visual goal, repeatedly correcting its errors based on what the camera observes.
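The observe-correct loop can be sketched as a damped least-squares update, a standard way to turn a Jacobian into a command correction. This is a minimal illustration under stated assumptions, not the researchers' implementation: the robot is a hypothetical toy whose tracked points move linearly with the command (so its Jacobian is constant), the Jacobians are assumed to be already learned, and the names `jacobian_step`, `control_loop` and `observe` are hypothetical. In NJF the Jacobian varies across the body, and point positions come from the camera rather than a formula.

```python
import numpy as np


def jacobian_step(jacobians, current_pts, target_pts, damping=1e-3, step_size=0.5):
    """One damped least-squares update of the motor command.

    jacobians:   (N, d, m) array, one d x m Jacobian per tracked point
    current_pts: (N, d) observed point positions
    target_pts:  (N, d) goal point positions
    Returns an (m,) command increment.
    """
    n, d, m = jacobians.shape
    J = jacobians.reshape(n * d, m)                   # stack every point's rows
    error = (target_pts - current_pts).reshape(n * d)
    # Solve (J^T J + damping * I) du = J^T error; damping keeps the solve stable
    du = np.linalg.solve(J.T @ J + damping * np.eye(m), J.T @ error)
    return step_size * du                             # take a partial step


def control_loop(observe, jacobians, target_pts, u0, iterations=50):
    """Observe, compute a correction, apply it, repeat (closed-loop control)."""
    u = u0.copy()
    for _ in range(iterations):
        current = observe(u)  # stands in for camera-based point tracking
        u = u + jacobian_step(jacobians, current, target_pts)
    return u


# Hypothetical toy robot: N points in d dims move linearly with m motor commands
rng = np.random.default_rng(0)
N, d, m = 5, 2, 3
A = rng.normal(size=(N, d, m))  # true (constant) Jacobians of the toy robot
x0 = rng.normal(size=(N, d))    # point positions at zero command


def observe(u):
    return x0 + np.einsum("ndm,m->nd", A, u)


u_goal = np.array([0.3, -0.2, 0.5])
target = observe(u_goal)  # the visual goal: where the points should end up
u_final = control_loop(observe, A, target, np.zeros(m))
print("max point error:", np.abs(observe(u_final) - target).max())
```

The partial step (`step_size=0.5`) and small `damping` term trade a little speed for stability. Because the loop re-observes the points on every iteration, errors in the learned model are corrected by feedback rather than accumulating.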

The research team successfully demonstrated NJF on a variety of platforms including soft grippers, rigid robotic hands, and sensorless rotating devices. In each case, the system deduced the robot’s shape and control dynamics without any embedded sensors or prior models.

NJF builds on neural radiance fields (NeRFs), extending the idea so that the model also predicts how each point on a robot moves in response to a control input. It is trained on footage of the robot performing random motor actions, recorded by multiple cameras that are needed only during training. Afterwards, a single monocular camera enables feedback control at around 12 Hz, which makes the method faster and more responsive than many simulation-based approaches.
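Learning from random motor actions can be illustrated with a much simpler stand-in. The sketch below is not NJF: instead of a neural field that predicts a Jacobian for any 3D point from multi-view images, it fits one constant Jacobian per tracked point by ordinary least squares, on a hypothetical toy robot whose points move linearly with the command. The names `collect_random_actions`, `fit_jacobians` and `observe`, the noise level and the sample count are illustrative assumptions.

```python
import numpy as np


def collect_random_actions(observe, u_base, num_samples, scale, rng):
    """Apply random command perturbations and record how tracked points move."""
    x_base = observe(u_base)
    du = rng.normal(scale=scale, size=(num_samples, u_base.shape[0]))
    dx = np.stack([observe(u_base + step) - x_base for step in du])  # (K, N, d)
    return du, dx


def fit_jacobians(du, dx):
    """Least-squares fit of one d x m Jacobian per point from (du, dx) pairs."""
    k, n, d = dx.shape
    m = du.shape[1]
    # Solve du @ W ~= dx_flat for W with shape (m, n * d)
    W, *_ = np.linalg.lstsq(du, dx.reshape(k, n * d), rcond=None)
    return W.T.reshape(n, d, m)


# Hypothetical toy robot with noisy point observations
rng = np.random.default_rng(1)
N, d, m = 4, 2, 3
A_true = rng.normal(size=(N, d, m))
x0 = rng.normal(size=(N, d))


def observe(u):
    noise = rng.normal(scale=0.01, size=(N, d))  # simulated tracking noise
    return x0 + np.einsum("ndm,m->nd", A_true, u) + noise


du, dx = collect_random_actions(observe, np.zeros(m), num_samples=200, scale=0.5, rng=rng)
J_est = fit_jacobians(du, dx)
print("max Jacobian error:", np.abs(J_est - A_true).max())
```

The point carried over from the article is that random actions, together with observations of how the body moved, provide enough supervision to recover the command-to-motion mapping. NJF learns that mapping with a neural network so that it can vary from point to point across the body.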

Although the system currently needs retraining for each robot and lacks tactile sensing, MIT researchers are working to extend its capabilities and reduce technical barriers. The long-term goal is accessible, adaptable robots that learn as humans do, through sight and experience.